The Rise of Explainable AI: Unraveling the Mysteries of Black Box Models

Last Updated: July 30, 2025 at 6:00:35 AM UTC

As AI systems rely more and more on complex neural networks, explaining their decisions has become a pressing concern, and researchers are developing techniques to open up black box models.

Neural networks have driven much of the recent progress in Artificial Intelligence. As these models grow more complex, however, they become harder to interpret, which has created a pressing need for Explainable AI (XAI). In this blog post, we look at why XAI matters, what makes it hard, and which techniques are available.

What is Explainable AI?

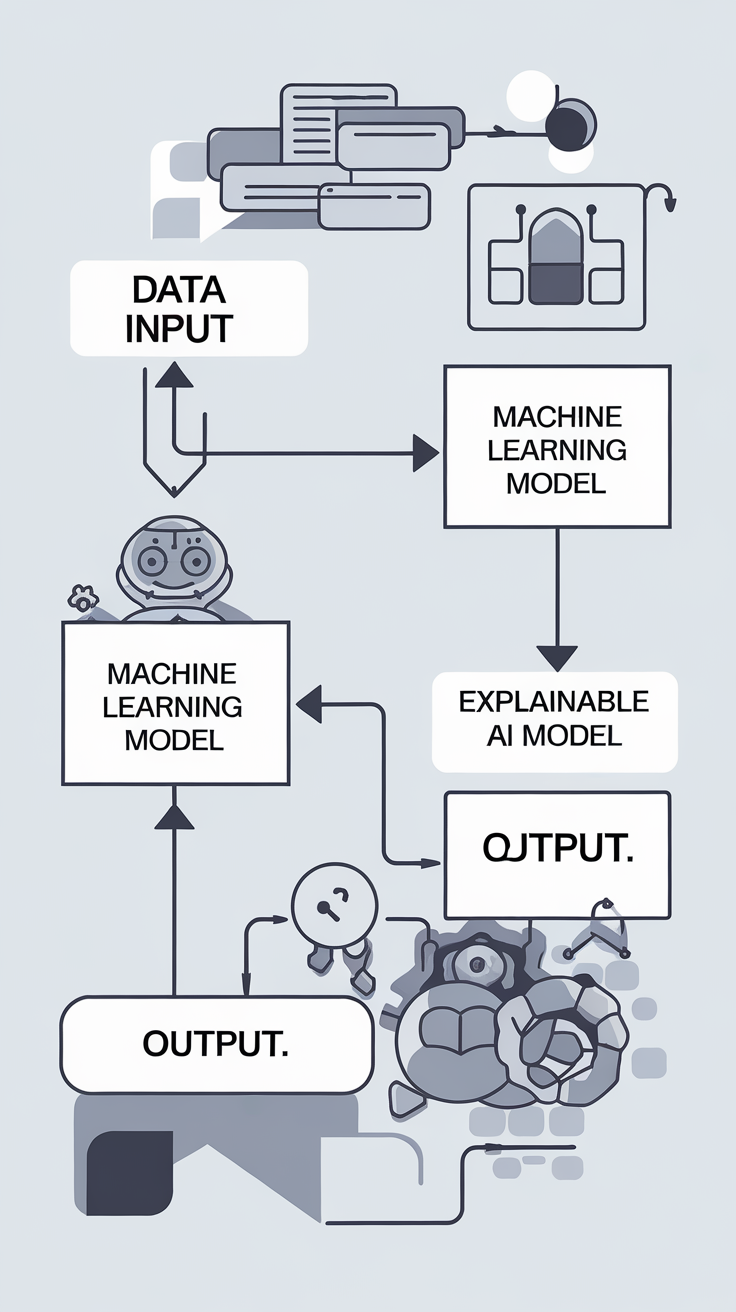

Explainable AI refers to the ability of AI systems to give transparent, interpretable accounts of their decision-making. That means understanding how a model arrived at a particular conclusion, which features it used, and how heavily it weighted each one. In other words, XAI aims to demystify the "black box" nature of complex neural networks so that humans can understand, and decide how far to trust, the decisions these systems make.
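To make "which features it used and how heavily it weighted each one" concrete, here is a minimal sketch for the simplest case: a linear model, where each feature's contribution is just its weight times its value. The loan-scoring model, its feature names, and all numbers are hypothetical and chosen only for illustration. Real neural networks do not decompose this cleanly, which is exactly why dedicated XAI techniques are needed.

```python
def explain_linear_prediction(weights, bias, features):
    """Return the prediction and each feature's additive contribution.

    For a linear model, prediction = bias + sum(weight * value), so every
    feature's share of the output can be read off directly.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    prediction = bias + sum(contributions.values())
    return prediction, contributions


# Hypothetical loan-scoring model; names and numbers are illustrative only.
weights = {"income": 0.5, "debt": -2.0, "years_employed": 1.0}
bias = 1.0
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}

prediction, contributions = explain_linear_prediction(weights, bias, applicant)

# List features from most to least influential (by absolute contribution).
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"prediction: {prediction:.2f}")
```

Here the explanation is exact because the model is additive. For a deep network, the same question ("how much did each input matter?") can only be answered approximately.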

Why is Explainable AI Important?

XAI matters most where decisions carry real consequences. AI systems are increasingly used in high-stakes applications such as healthcare, finance, and transportation, and a lack of transparency and interpretability in these systems can cause problems such as:

- Lack of trust: When users don't understand how the model arrived at a particular conclusion, they may not trust the results.

- Bias: Complex models can inadvertently amplify biases present in the training data, leading to unfair outcomes.

- Legal and regulatory issues: An organization that cannot explain how an automated decision was made may struggle to demonstrate compliance or to respond when that decision is challenged.

Challenges in Explainable AI

XAI comes with challenges of its own. Some of the key ones include:

- Complexity: Neural networks with many layers and parameters can be difficult to interpret, even for experts.

- Limited data: The availability of high-quality training data is often limited, making it challenging to develop accurate and interpretable models.

- Computational resources: XAI techniques often require significant computational resources, which can be a challenge for smaller organizations or those with limited budgets.

Techniques for Explainable AI

Several techniques have been developed to achieve XAI, including:

- Model-agnostic explanations: These techniques can be applied to any machine learning model, regardless of its architecture or complexity.

- Model-specific explanations: These techniques are tailored to a particular model type and draw on its internal structure, such as the coefficients of a linear model or the splits of a decision tree.

- Visualization: Visualizing the decision-making process can help users understand how the model arrived at a particular conclusion.

- Feature importance: Identifying the most important features used by the model can provide insights into its decision-making process.
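As one illustration of how model-agnostic explanation and feature importance can combine, the sketch below implements permutation importance: shuffle one feature's column, re-run the model, and measure how much the error grows. It only calls the model as a function, so it would work with any model. The `black_box` function, the synthetic data, and the helper names are assumptions made for this example, not part of any particular library.

```python
import random


def mean_squared_error(model, rows, targets):
    """Average squared difference between model predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=10, seed=0):
    """Estimate each feature's importance as the mean increase in error
    when that feature's values are shuffled across rows."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild the dataset with only this column permuted.
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mean_squared_error(model, permuted, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical "black box": we only ever call it, never inspect it.
# It depends strongly on feature 0, weakly on feature 1, and not at all on feature 2.
def black_box(row):
    return 3.0 * row[0] + 0.5 * row[1]


# Synthetic data, generated only for this illustration.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, targets)
for index, score in enumerate(importances):
    print(f"feature {index}: {score:.3f}")
```

A feature the model ignores gets an importance of zero, because shuffling it changes no predictions. Note the cost: the model is re-evaluated on the full dataset once per feature per repeat, which is one reason XAI techniques can be computationally expensive.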

Conclusion/Key Takeaways

Explainable AI is a pressing concern because complex neural networks are increasingly used in high-stakes applications. XAI has its challenges, but techniques such as model-agnostic and model-specific explanations, visualization, and feature importance already offer ways to make AI decision-making more transparent and interpretable. Understanding why XAI matters, where it is hard, and which techniques are available is a first step toward building more trustworthy AI systems.