The Emergence of Explainable AI: Unlocking Transparency and Trust in Machine Learning

Last Updated: July 4, 2025 at 6:01:04 AM UTC

As AI systems become more pervasive, the need for transparency and trust grows more pressing. Explainable AI (XAI) aims to meet that need by providing insight into how complex machine learning models reach their decisions.

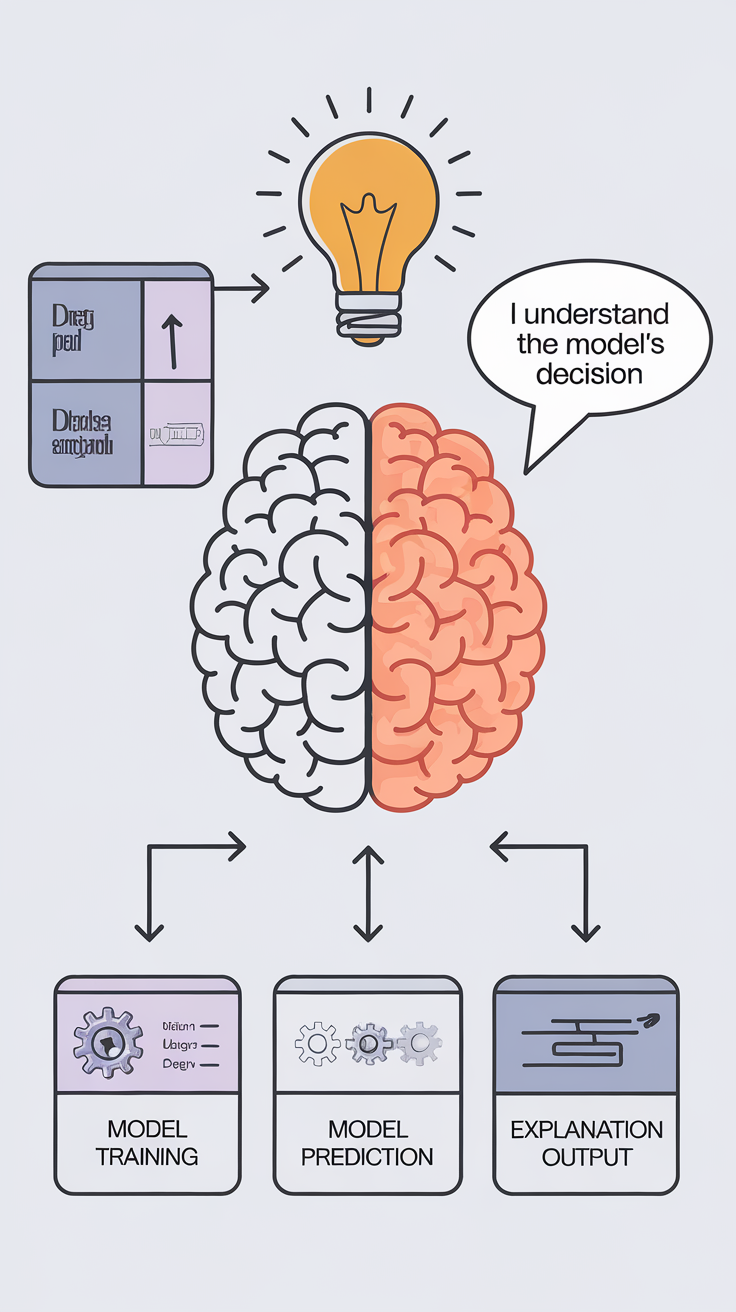

The field of artificial intelligence has made rapid progress in recent years, with machine learning (ML) models achieving high accuracy in a wide range of applications. Many of the most accurate models, however, are opaque: even their developers cannot easily say why a particular input produced a particular output. This is where Explainable AI (XAI) comes in. XAI is a rapidly evolving field that aims to provide insight into the decision-making processes of complex ML models.

What is Explainable AI?

XAI is a set of methods for making the decisions of AI systems understandable to the people who use and oversee them. It covers both models that are interpretable by design, such as linear models and shallow decision trees, and techniques that explain the predictions of opaque models after they have been trained. In other words, XAI lets humans "see inside" the black box of AI and understand how it arrived at its conclusions.
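A linear scoring model is one of the simplest cases of a model that can explain its own decisions: each feature's contribution to the score is its weight times its value, and those contributions plus the bias add up exactly to the output. The sketch below assumes a hypothetical loan-scoring model; the feature names, weights, bias, and the `score`/`explain` helpers are invented for illustration and do not come from any real system.

```python
# Hypothetical loan-scoring model: the feature names, weights, and bias are
# invented for illustration, not taken from any real system.
WEIGHTS = {"income": 0.4, "debt_ratio": -1.5, "years_employed": 0.2}
BIAS = -0.5


def score(applicant: dict[str, float]) -> float:
    """Linear score: the bias plus the weighted sum of the features."""
    return BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())


def explain(applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contributions (weight * value), largest magnitude first.

    For a linear model these contributions plus the bias sum exactly to the
    score, so the explanation is faithful to the model by construction.
    """
    contributions = [(name, w * applicant[name]) for name, w in WEIGHTS.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    applicant = {"income": 3.0, "debt_ratio": 0.8, "years_employed": 5.0}
    print(f"score = {score(applicant):.2f}")
    for name, contribution in explain(applicant):
        print(f"{name:>15}: {contribution:+.2f}")
```

The same idea does not carry over directly to deep networks, where the output is not a simple sum of per-feature terms; that gap is what post-hoc explanation techniques try to fill.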

Why is Explainability Important?

Explainability matters for several reasons:

- Trust: When AI systems can explain their decisions, users are more likely to trust the results and accept the recommendations.

- Transparency: XAI provides a window into the decision-making process, allowing users to identify potential biases and errors.

- Accountability: Explanations make it possible to audit individual decisions and to hold the people and organizations that deploy AI systems accountable for them, which is essential in high-stakes applications like healthcare, finance, and law enforcement.

- Debugging: XAI enables developers to identify and fix errors in ML models, leading to more accurate and reliable results.

How is Explainable AI Achieved?

There are several approaches to building XAI systems:

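- Model-agnostic techniques: These methods treat the model as a black box and look only at its inputs and outputs, so they can be applied to any ML model, regardless of its architecture or type. Permutation feature importance, LIME, and SHAP are well-known examples.

- Model-specific techniques: These approaches exploit the internals of a particular kind of model, such as the split rules of a decision tree or the gradients of a neural network.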

- Hybrid approaches: These combine multiple techniques to achieve better explainability.
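Permutation feature importance is a simple model-agnostic technique: shuffle one feature's values across the dataset, breaking its link to the label, and measure how much the model's accuracy drops. The sketch below is a from-scratch illustration rather than any library's API; it only ever calls `model(row)`, which is what makes it model-agnostic. The toy data and the `black_box` function are hypothetical stand-ins for a real dataset and trained model.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: list[Row], y: list[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Model, X: list[Row], y: list[int], n_repeats: int = 10, seed: int = 0
) -> list[float]:
    """Mean accuracy drop when each feature column is randomly shuffled.

    The model is only called as model(row), so the same code works for a
    decision tree, a neural network, or anything else.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value
                X_perm.append(new_row)
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    data_rng = random.Random(42)
    X = [[data_rng.random() for _ in range(3)] for _ in range(200)]
    y = [int(row[0] > 0.5) for row in X]

    def black_box(row: Row) -> int:  # hypothetical stand-in for a trained model
        return int(row[0] > 0.5)

    print(permutation_importance(black_box, X, y))
```

In the demo the model reads only feature 0, so shuffling features 1 and 2 leaves accuracy unchanged and their importance comes out as 0.0. In practice the drop is usually measured on held-out data, so that the scores reflect what the model relies on when generalizing rather than what it memorized.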

Challenges and Limitations

While XAI holds great promise, there are several challenges and limitations to consider:

- Complexity: Explanations can themselves be complex and difficult to interpret, especially for non-technical users.

- Scalability: As ML models become larger and more complex, explainability can become increasingly challenging.

- Evaluation: Developing effective evaluation metrics for XAI systems is an ongoing challenge.
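One commonly used, if partial, measure is fidelity: how often an interpretable surrogate reproduces the black-box model's predictions. The sketch below fits a one-rule surrogate ("feature j > t") to a model's own outputs and reports its fidelity. The `fit_stump` and `fidelity` helpers, the toy data, and the `black_box` function are hypothetical illustrations, not a standard API.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def fidelity(black_box: Model, surrogate: Model, X: list[Row]) -> float:
    """Fraction of inputs on which the surrogate reproduces the black box."""
    return sum(black_box(row) == surrogate(row) for row in X) / len(X)


def fit_stump(black_box: Model, X: list[Row]) -> Model:
    """Fit a one-rule surrogate 'row[j] > t' to the black box's own labels."""
    labels = [black_box(row) for row in X]
    best_agreement, best_rule = -1, (0, 0.0)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            agreement = sum(int(row[j] > t) == lab for row, lab in zip(X, labels))
            if agreement > best_agreement:
                best_agreement, best_rule = agreement, (j, t)
    j, t = best_rule
    return lambda row: int(row[j] > t)


if __name__ == "__main__":
    rng = random.Random(0)
    X = [[rng.random() for _ in range(3)] for _ in range(200)]

    def black_box(row: Row) -> int:  # hypothetical stand-in for an opaque model
        return int(row[0] + 0.5 * row[1] > 0.8)

    stump = fit_stump(black_box, X)
    print(f"surrogate fidelity: {fidelity(black_box, stump, X):.2f}")
```

High fidelity only shows that the surrogate mimics the model on this data; it says nothing about whether a person actually understands the explanation, which is part of why evaluation remains an open problem.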

Conclusion/Key Takeaways

Explainable AI is an important development in artificial intelligence, bringing transparency to complex ML models. By opening up the black box, XAI helps humans understand how AI systems reach their decisions, which supports trust, accountability, and reliability. As the field matures and its challenges of complexity, scale, and evaluation are addressed, XAI is likely to play a growing role in how AI is adopted and deployed across industries.