The Rise of Explainable AI: Unlocking Trust and Transparency in Machine Learning

Last Updated: July 15, 2025 at 6:00:35 AM UTC

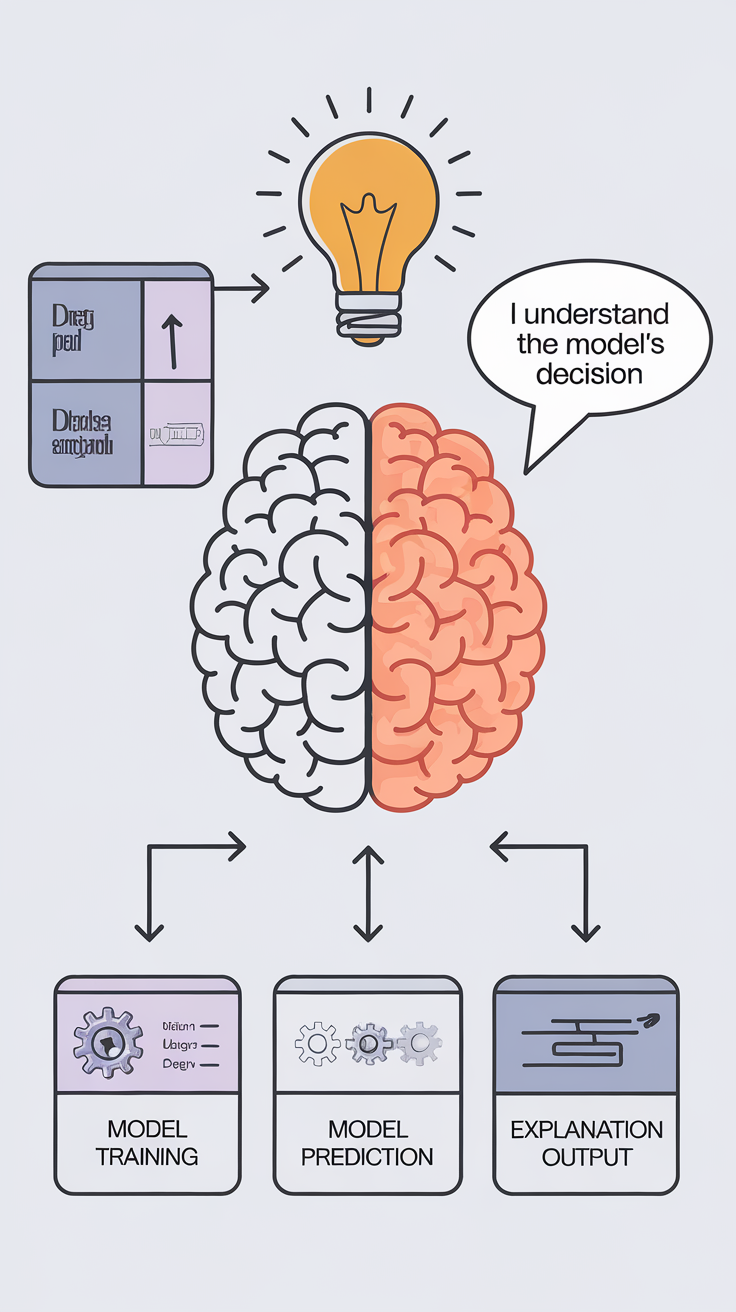

Explainable AI (XAI) is transforming the AI landscape by providing insights into model decisions, enabling more informed decision-making, and fostering trust in AI-driven systems.

The artificial intelligence (AI) industry has made tremendous progress in recent years, with deep learning models achieving state-of-the-art performance in various applications. However, as AI becomes increasingly pervasive in our lives, there is a growing need for transparency and explainability in AI-driven decision-making. This is where Explainable AI (XAI) comes in: it is a subfield of AI research focused on making AI models more interpretable and transparent.

What is Explainable AI?

XAI is an umbrella term that encompasses various techniques aimed at providing insights into AI model decisions. The primary goal of XAI is to make AI systems more trustworthy by enabling humans to understand how and why AI models make certain decisions. This is particularly important in high-stakes applications, such as healthcare, finance, and transportation, where AI-driven decisions can have significant consequences.

Why is Explainable AI Important?

XAI is essential for building trust in AI-driven systems. Without transparency, AI models can be perceived as opaque and unpredictable, leading to concerns about fairness, accountability, and reliability. XAI addresses these concerns by providing insights into model decisions, enabling humans to:

- Identify biases and errors

- Understand the reasoning behind AI-driven decisions

- Develop more robust and accurate AI models

- Improve communication between humans and AI systems

Techniques for Explainable AI

Several techniques are used to achieve XAI, including:

- Model interpretability: Techniques like feature importance, partial dependence plots, and SHAP values show how individual features contribute to model predictions.

- Attention mechanisms: Attention weights indicate which input features or tokens a model weighted most heavily, although high attention does not always mean a feature caused the decision.

- Model-agnostic explanations: Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP's KernelExplainer can explain the predictions of any machine learning model, regardless of its architecture or complexity. (SHAP's TreeExplainer, by contrast, is specific to tree-based models.)

- Human-in-the-loop: This approach brings humans into the AI decision-making process to provide additional context, correct errors, and improve model performance.
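
To make the feature-importance idea from the list above concrete, here is a minimal sketch of permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. It uses only the Python standard library and is not the API of any particular XAI package. The `toy_model` function, the two-feature layout (income, then an unused second feature), and the random data are all hypothetical and exist only for illustration.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only column j, leaving every other feature untouched.
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model: predicts 1 when feature 0 ("income") exceeds 50
# and ignores feature 1 entirely.
def toy_model(row):
    return 1 if row[0] > 50 else 0


data_rng = random.Random(42)
rows = [[data_rng.uniform(0, 100), data_rng.uniform(0, 100)] for _ in range(200)]
labels = [toy_model(row) for row in rows]  # labels the toy model fits perfectly

importances = permutation_importance(toy_model, rows, labels)
print(importances)  # feature 0 shows a large drop; feature 1 shows none
```

Because the procedure only calls the model as a black box, the same function works for any classifier that can be wrapped as `model(row)`. A feature the model never reads, like feature 1 here, gets an importance of exactly zero.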

Applications of Explainable AI

XAI has numerous applications across various industries, including:

- Healthcare: XAI can help doctors understand AI-driven diagnoses and treatment recommendations, improving patient outcomes and reducing medical errors.

- Finance: XAI can provide insights into AI-driven investment decisions, enabling investors to make more informed choices and reducing the risk of financial losses.

- Transportation: XAI can improve the safety and reliability of autonomous vehicles by providing insights into AI-driven decision-making and reducing the risk of accidents.

Conclusion/Key Takeaways

Explainable AI is a critical component of the AI landscape, enabling transparency and trust in AI-driven decision-making. By providing insights into model decisions, XAI can improve the reliability, accountability, and fairness of AI systems. As AI continues to transform various industries, XAI will play a vital role in building trust and confidence in AI-driven systems.