The Rise of Explainable AI: Unraveling the Mysteries of Machine Learning

Last Updated: July 29, 2025 at 6:00:35 AM UTC

As AI becomes increasingly pervasive in our daily lives, the demand for transparency and accountability in machine learning has grown. Explainable AI (XAI) responds to that demand by giving developers tools to build models whose decisions can be inspected and understood.

As AI systems grow more complex and more widely deployed, it becomes essential to understand how they reach their decisions, especially in high-stakes applications like finance, healthcare, and law enforcement. Explainable AI (XAI) is a growing field that aims to make machine learning more transparent and trustworthy by exposing the reasoning behind a model's outputs.

What is Explainable AI?

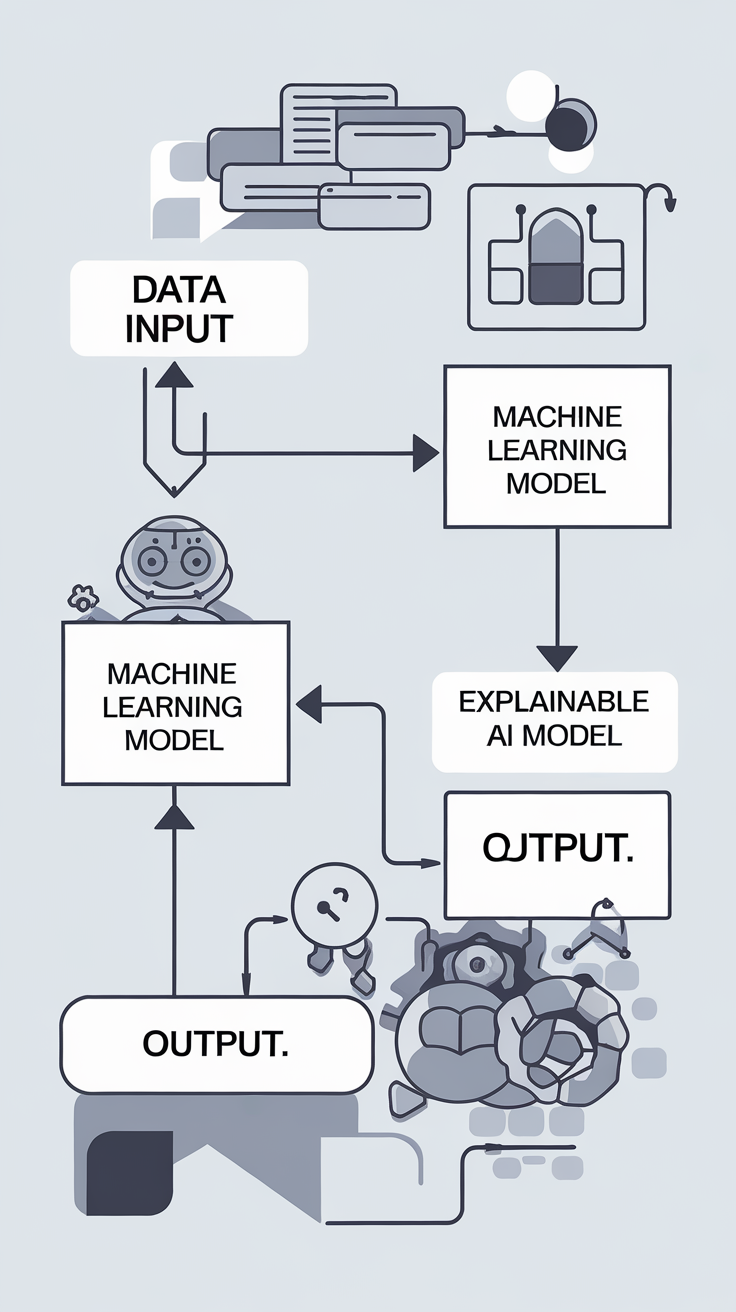

XAI is an approach to artificial intelligence that focuses on developing models that provide clear, concise, and actionable explanations for their decisions. This means that AI systems can not only make predictions or classify data but also explain the reasoning behind those decisions. XAI is an essential component of trustworthy AI, as it enables developers to identify biases, improve model performance, and increase user trust.
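To make "a prediction plus its reasoning" concrete, here is a minimal sketch using a linear scoring model, where each feature's contribution is simply its weight times its value. The feature names, weights, and the `predict_with_explanation` helper are hypothetical, chosen only for illustration; real systems would learn the weights from data.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    prediction: float
    contributions: dict  # feature name -> signed contribution to the score


# Hypothetical weights for a toy credit-scoring model (illustrative only).
WEIGHTS = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
BIAS = 1.0


def predict_with_explanation(features: dict) -> Explanation:
    """Return the score together with each feature's additive contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    prediction = BIAS + sum(contributions.values())
    return Explanation(prediction, contributions)


applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}
result = predict_with_explanation(applicant)

print(f"score = {result.prediction:.2f}")
# List features from most to least influential for this applicant.
for name, value in sorted(result.contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name:>15}: {value:+.2f}")
```

Because the model is additive, the contributions and the bias sum exactly to the prediction, so the explanation is faithful by construction. More complex models generally need approximate, post-hoc methods to produce a comparable breakdown.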

Why is Explainable AI Important?

The importance of XAI lies in its ability to address the growing concerns around AI transparency and accountability. With XAI, developers can:

- Identify Biases: XAI helps surface biases in AI models so that they can be measured and corrected.

- Improve Model Performance: By understanding how AI models make decisions, developers can refine them to achieve better results.

- Increase User Trust: XAI fosters transparency, allowing users to understand the reasoning behind AI-driven decisions.

- Comply with Regulations: XAI supports compliance with regulations such as the GDPR and the EU AI Act, which emphasize transparency and accountability in AI development.

How is Explainable AI Achieved?

XAI is achieved through various techniques, including:

- Model Interpretability: Techniques like feature importance, partial dependence plots, and SHAP values help developers understand how models make decisions.

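- Explainable Models: Inherently interpretable models, such as linear models and shallow decision trees, can be read directly. For more opaque models, post-hoc explainers like LIME (Local Interpretable Model-agnostic Explanations) and SHAP's TreeExplainer generate explanations for individual predictions.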

- Human-in-the-Loop: Human oversight and feedback are used to improve model performance and explainability.

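- Adversarial Examples: Techniques like adversarial attacks and adversarial training probe how a model responds to small, deliberately chosen input changes. This exposes brittle or spurious decision rules, which can include reliance on biased features, and helps make the model more robust.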
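As one illustration of the feature importance idea mentioned above, the following is a minimal sketch of permutation importance: shuffle one feature at a time and measure how much the model's error grows. The `black_box` function is a hypothetical stand-in for a trained model, and the data is synthetic; in practice a library implementation such as scikit-learn's `permutation_importance` would usually be preferred.

```python
import random


def black_box(row):
    # Hypothetical stand-in for a trained model: relies strongly on x0,
    # weakly on x1, and ignores x2 entirely.
    x0, x1, x2 = row
    return 3.0 * x0 + 0.5 * x1


def mean_squared_error(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + (v,) + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data: labels come straight from the model, so baseline error is 0.
data_rng = random.Random(42)
X = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(200)]
y = [black_box(row) for row in X]

importances = permutation_importance(black_box, X, y)
for j, score in enumerate(importances):
    print(f"x{j}: {score:.4f}")
```

The feature the model ignores gets an importance of zero, while the heavily weighted feature gets the largest score. The method treats the model purely as a function from inputs to outputs, which is why it works for any model type.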

Challenges and Opportunities

While XAI presents numerous benefits, it also poses challenges:

- Complexity: Many explanation techniques are computationally expensive and require specialized expertise to implement and interpret correctly.

- Interpretability Limitations: Not all models can be fully explained, and some may require simplification or approximation.

- Cost: Developing XAI models can be resource-intensive, requiring significant investments in time and money.
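The approximation trade-off noted above can be sketched with a global surrogate: a simple, readable rule is fitted to mimic an opaque model, and its fidelity (the fraction of inputs on which the two agree) shows how much the simplification loses. The `black_box` function and the single-threshold `stump` surrogate below are hypothetical, chosen only to keep the example small.

```python
def black_box(x):
    # Hypothetical stand-in for an opaque model: positive above 6,
    # plus an isolated positive region between 2 and 3.
    return 1 if x > 6 or 2 <= x <= 3 else 0


def stump(threshold):
    """Interpretable surrogate: a single rule 'predict 1 if x > threshold'."""
    return lambda x: 1 if x > threshold else 0


def fidelity(surrogate, model, xs):
    """Fraction of inputs on which the surrogate agrees with the model."""
    return sum(surrogate(x) == model(x) for x in xs) / len(xs)


# Evaluate on a grid of inputs from 0.0 to 10.0.
xs = [i / 10 for i in range(101)]

# Pick the threshold whose rule best reproduces the black box's outputs.
best_threshold = max(xs, key=lambda t: fidelity(stump(t), black_box, xs))
best_fidelity = fidelity(stump(best_threshold), black_box, xs)

print(f"rule: predict 1 if x > {best_threshold}; fidelity = {best_fidelity:.3f}")
```

Here the best single-threshold rule agrees with the black box on roughly 89% of the grid: it captures the main boundary at 6 but cannot represent the isolated region between 2 and 3. Reporting fidelity alongside a simplified explanation makes that gap visible rather than hiding it.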

However, XAI also presents significant opportunities:

- Improved AI Development: XAI enables developers to create more transparent and trustworthy AI models.

- Increased User Trust: XAI fosters transparency, leading to increased user trust and adoption.

- Regulatory Compliance: XAI helps organizations meet regulatory requirements, reducing the risk of non-compliance.

Conclusion/Key Takeaways

Explainable AI is a crucial step towards creating more trustworthy and transparent AI systems. By providing clear explanations for AI-driven decisions, XAI empowers developers to build more responsible AI models that can be used in high-stakes applications. As the demand for transparency and accountability in AI continues to grow, XAI is poised to play a vital role in shaping the future of machine learning.