The Rise of Explainable AI: Unlocking Transparency and Trust in AI Decision-Making

Last Updated: July 9, 2025 at 6:00:54 AM UTC

As AI increasingly permeates critical decision-making processes, explainable AI (XAI) is emerging as a vital solution, enabling humans to comprehend AI-driven outcomes and build trust in AI systems.

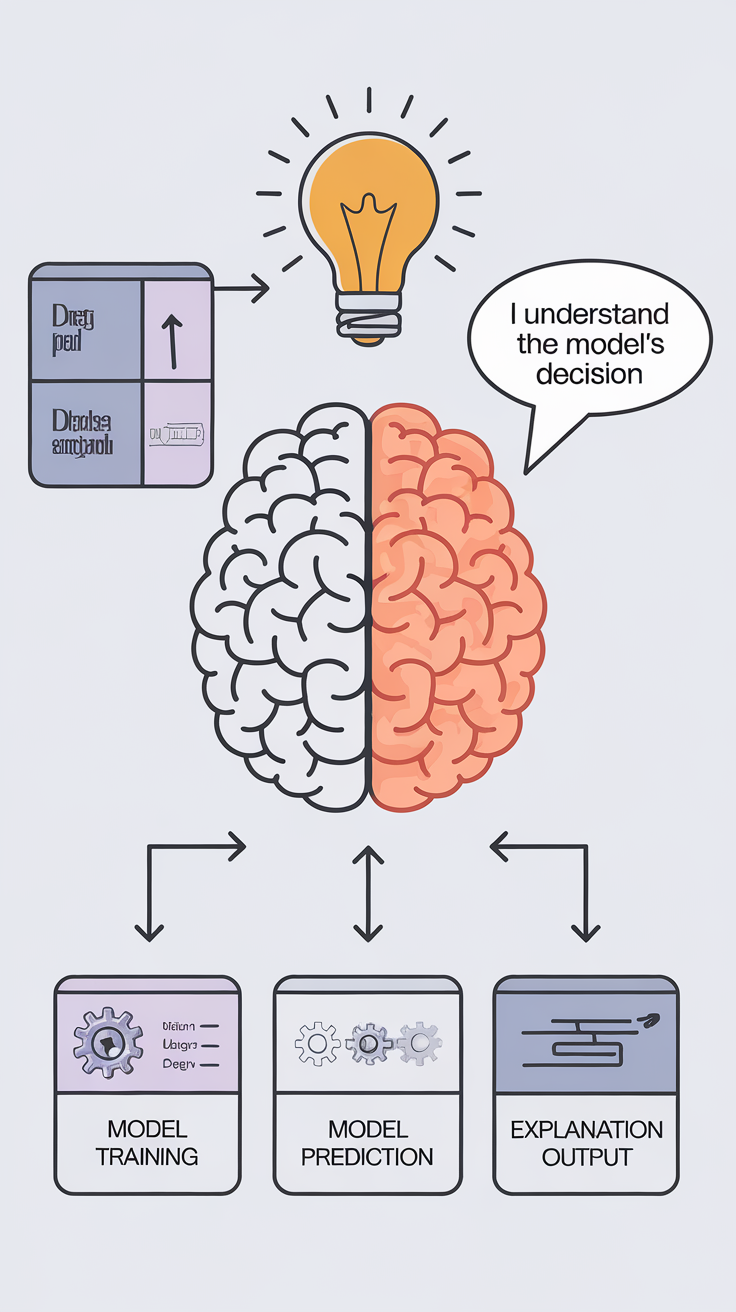

The rapid adoption of Artificial Intelligence (AI) across industries has led to a pressing need for transparency and trust in AI decision-making processes. As AI models become increasingly complex and opaque, it's becoming essential to understand how they arrive at their conclusions. This is where Explainable AI (XAI) comes in – a rapidly growing field that aims to provide insights into AI-driven decisions, making them more transparent, accountable, and trustworthy.

The Case for Explainable AI

The proliferation of AI in high-stakes domains such as healthcare, finance, and law enforcement has raised concerns about accountability and transparency. As AI systems become more autonomous, it's crucial to ensure that their decisions are fair and unbiased. XAI addresses this challenge by exposing the factors behind AI-driven outcomes, enabling humans to:

- Identify biases: Uncover potential biases in AI models and address them proactively.

- Understand decision-making: Gain insights into the reasoning behind AI-driven decisions, improving trust and accountability.

- Improve performance: Use explanations to spot when a model relies on spurious or irrelevant features, then refine the model or its training data, leading to better performance and accuracy.
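
As a rough illustration of the "identify biases" point, the sketch below compares how often a model returns a positive decision for each group. The function names, the predictions, and the group labels are all made up for this example, and the gap it reports (often called the demographic parity difference) is only one of several fairness measures.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and group membership.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))
print(demographic_parity_gap(preds, groups))
```

A large gap does not prove the model is unfair, but it flags where a closer look at the model's reasoning is warranted.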

Techniques for Explainable AI

Several techniques are being developed to achieve XAI, including:

- Model interpretability: Techniques like feature importance and partial dependence plots describe a model's overall behavior, while SHAP (SHapley Additive exPlanations) values attribute an individual prediction to the input features that produced it.

- Attention mechanisms: Attention weights show which parts of the input a model focuses on, offering a window into AI-driven decision-making, although they do not always faithfully reflect the model's actual reasoning.

- Model-agnostic explanations: Methods like LIME (Local Interpretable Model-agnostic Explanations) and Kernel SHAP treat the model as a black box, making them compatible with various AI models. (SHAP's TreeExplainer, by contrast, is specific to tree-based models.)

- Explainable reinforcement learning: This approach focuses on explaining the policies and actions of reinforcement learning agents as they interact with complex environments.
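
To make the idea behind SHAP values more concrete, here is a minimal sketch that computes exact Shapley values for a single prediction by enumerating every feature coalition. It is not how the SHAP library works internally: the `shapley_values` helper, the toy scoring model, and the choice to "remove" a feature by replacing it with a baseline value are all assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced with their baseline value,
    a simplifying assumption about what an "absent" feature means.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        # Coalition features come from the instance, the rest from the baseline.
        mixed = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(mixed)

    phi = []
    for i in features:
        others = [j for j in features if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_credit_score(x):
    # Hypothetical model: income, debt, and an income * years interaction.
    income, debt, years = x
    return 0.5 * income - 0.8 * debt + 0.1 * income * years


applicant = [4.0, 2.0, 3.0]  # made-up feature values
baseline = [1.0, 1.0, 1.0]   # made-up reference point

phi = shapley_values(toy_credit_score, applicant, baseline)
print(phi)
```

The attributions come out at approximately 2.1 for income, -0.8 for debt, and 0.5 for years, and they sum to the difference between the applicant's score and the baseline score. Because the loop visits every subset of features, this approach is only practical for a handful of features; real tools rely on approximations or model-specific shortcuts.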

Applications of Explainable AI

XAI has far-reaching implications across various industries, including:

- Healthcare: Explainable AI can help medical professionals understand AI-driven diagnoses and treatment recommendations, improving patient outcomes and trust.

- Finance: XAI can provide transparency into AI-driven investment decisions, enabling investors to make more informed choices.

- Law Enforcement: Explainable AI can help ensure fairness and accountability in AI-driven policing and crime prediction, reducing the risk of biased decisions.

Challenges and Future Directions

While XAI has made significant progress, several challenges remain:

- Model complexity: Complex AI models can be difficult to interpret, requiring innovative techniques to provide meaningful explanations.

- Data quality: Explanations are only as reliable as the model and data behind them, so high-quality training data is essential for producing accurate and trustworthy explanations.

- Regulatory frameworks: Developing regulatory frameworks that address XAI's unique challenges will be crucial for widespread adoption.

Conclusion

Explainable AI is a critical step towards building trust in AI decision-making processes. By making model behavior transparent and accountable, XAI helps people decide when to rely on an AI system and when to question it. As the field continues to evolve, it's essential to address the challenges and complexities surrounding XAI, ensuring that this technology benefits society as a whole.

The future of AI depends on our ability to understand and trust the decisions made by machines. Explainable AI is the key to unlocking this trust and building a more transparent and accountable AI ecosystem.