The Evolution of Explainable AI: Unraveling the Mysteries of Black Box Models

Last Updated: July 23, 2025 at 6:00:57 AM UTC

As AI models grow more complex, the need to explain their decisions becomes more pressing. This post surveys recent advances in explainable AI (XAI) and their potential impact on the industry.

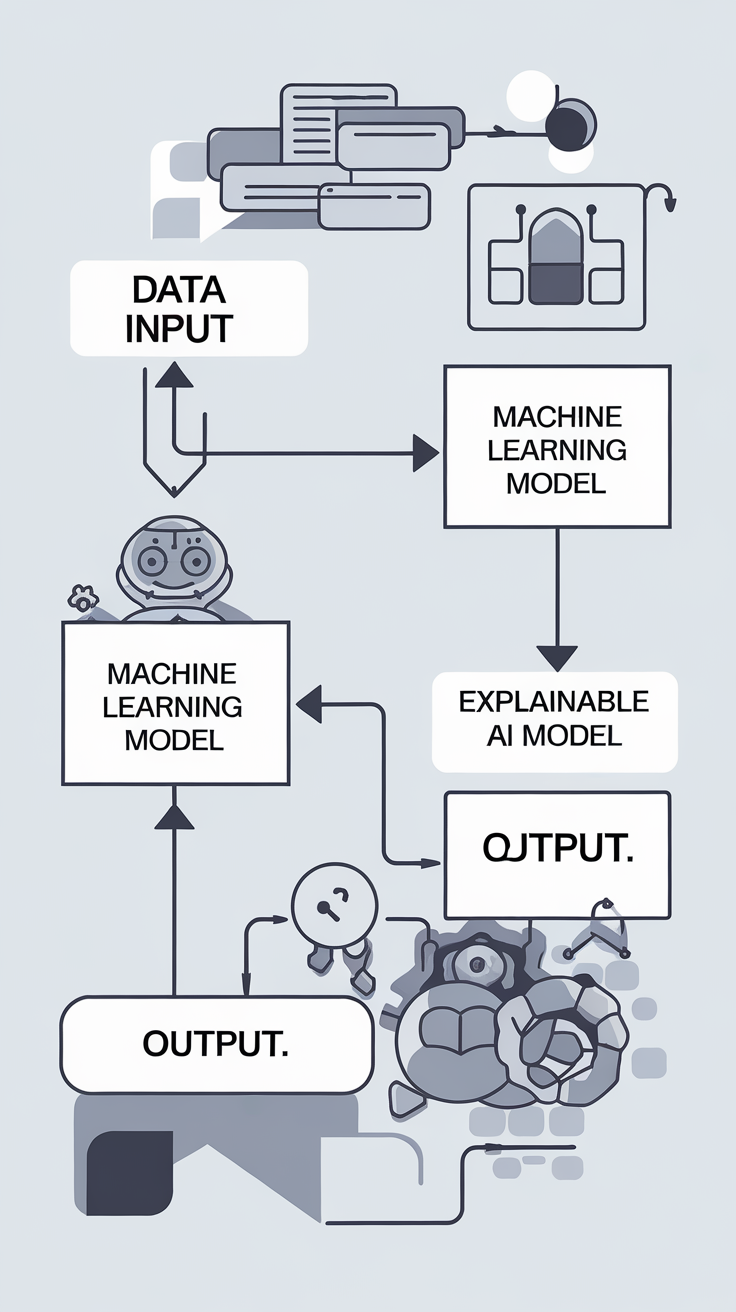

Artificial intelligence (AI) has produced complex models that make predictions and decisions with remarkable accuracy. However, these models often lack transparency and interpretability, which raises concerns about whether they can be trusted and who is accountable for their outputs. Explainable AI (XAI) is the field that aims to make AI models more transparent and interpretable.

The Rise of Black Box Models

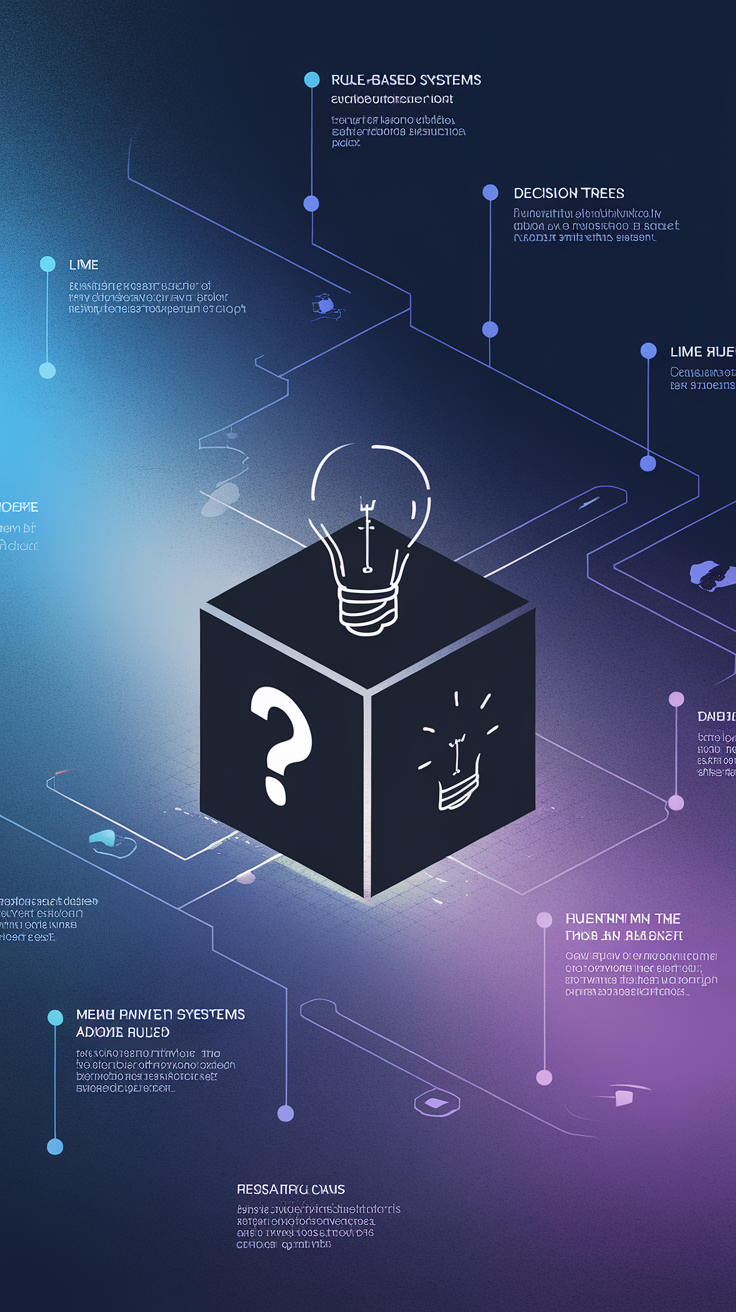

In recent years, deep neural networks have made it possible to build models that process vast amounts of data and make accurate predictions. However, these models are often called "black box" models because their decision-making processes are difficult to understand and interpret.

Black box models are typically built with architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), which are trained on large datasets to learn complex patterns and relationships. What these models learn is spread across many layers of nonlinear transformations and can span millions of parameters, so no single parameter corresponds to a human-readable rule. As a result, it is difficult to trace how they arrive at a given prediction or decision.
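The difference between an interpretable model and a black box can be sketched in a few lines of Python. This is a minimal illustration with two hypothetical toy models whose weights are made up: for the linear model, a prediction splits exactly into per-feature contributions (weight times value), while in the tiny two-layer network every input passes through shared nonlinear hidden units, so the effect of changing one feature depends on the values of the others.

```python
import math

# Hypothetical toy models over three input features; all weights are made up.
LINEAR_W = [0.8, -0.5, 0.3]
LINEAR_B = 0.1

HIDDEN_W = [[0.9, -1.2, 0.4], [-0.7, 0.5, 1.1]]  # 2 hidden units x 3 inputs
HIDDEN_B = [0.0, 0.2]
OUT_W = [1.5, -0.8]
OUT_B = 0.05


def linear_predict(x):
    return LINEAR_B + sum(w * xi for w, xi in zip(LINEAR_W, x))


def linear_contributions(x):
    # Exact decomposition: prediction = bias + sum of these per-feature terms.
    return [w * xi for w, xi in zip(LINEAR_W, x)]


def mlp_predict(x):
    # Every hidden unit mixes all inputs before the tanh nonlinearity.
    hidden = [math.tanh(b + sum(w * xi for w, xi in zip(row, x)))
              for row, b in zip(HIDDEN_W, HIDDEN_B)]
    return OUT_B + sum(w * h for w, h in zip(OUT_W, hidden))


def feature_effect(predict, x, i, step=1.0):
    # Change in output when feature i is increased by `step`, others held fixed.
    bumped = list(x)
    bumped[i] += step
    return predict(bumped) - predict(x)


contexts = [[0.0, 0.0, 0.0], [0.0, 2.0, 0.0]]
for x in contexts:
    print(x,
          "linear effect of feature 0:", round(feature_effect(linear_predict, x, 0), 3),
          "network effect of feature 0:", round(feature_effect(mlp_predict, x, 0), 3))
```

The linear model's effect for feature 0 is the same in both contexts, while the network's changes with the value of feature 1. Real CNNs and RNNs compound this entanglement across far more units and layers.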

The Need for Explainable AI

In high-stakes applications such as healthcare and finance, not knowing how a model reached a decision can have serious consequences. A clinician cannot easily verify why a model flagged a scan as abnormal, and a lender may be unable to justify why a loan application was rejected.

XAI develops techniques that explain how AI models reach their decisions, either by designing models that are interpretable from the start or by explaining an already-trained model after the fact. These techniques include model interpretability, feature attribution, and visualizations.

Advances in Explainable AI

In recent years, XAI has advanced significantly, producing several families of techniques for explaining how models reach their predictions and decisions:

- Model interpretability: using models whose reasoning can be read directly, such as linear models and shallow decision trees, or approximating a complex model with a simpler one that people can inspect.

- Feature attribution: assigning each input feature a score that reflects how much it contributed to a prediction. Common methods include permutation importance, SHAP, and Integrated Gradients.

- Visualizations: displaying what a model relies on, for example saliency maps that highlight the pixels most responsible for an image classifier's output, or partial dependence plots that show how a prediction changes as one feature varies.
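Feature attribution can be made concrete with permutation importance, one of the simplest model-agnostic methods: shuffle one feature's column, which breaks its link to the target, and measure how much the model's error grows. The sketch below is a plain-Python illustration; the `model` function and the synthetic dataset are hypothetical stand-ins for a trained model and real data. In practice, scikit-learn provides a library implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def model(row):
    # Hypothetical "trained" model: relies heavily on feature 0, a little on
    # feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]


def mse(predict, X, y):
    return sum((predict(r) - t) ** 2 for r, t in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    rng = random.Random(seed)
    baseline = mse(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row with feature j replaced by the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(predict, X_perm, y) - baseline)
        # Average error increase over repeats = importance of feature j.
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data: the target depends on features 0 and 1 plus small noise.
data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [3.0 * r[0] + 0.5 * r[1] + data_rng.gauss(0, 0.1) for r in X]

for j, imp in enumerate(permutation_importance(model, X, y)):
    print(f"feature {j}: {imp:.3f}")
```

Feature 0 receives by far the largest score and feature 2, which the model never reads, scores exactly zero. Because the method only calls `predict`, it works the same way on an opaque neural network.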

These techniques have been applied across a range of domains, including image classification, natural language processing, and recommender systems.
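For image classification, one widely used visualization is the vanilla-gradient saliency map: the magnitude of the gradient of the class score with respect to each pixel. Deep-learning frameworks compute that gradient with automatic differentiation; this minimal sketch instead estimates it with central finite differences so that it runs in plain Python against any black-box scoring function. The 3x3 "image" and the template-based `class_score` are hypothetical.

```python
import math

# Hypothetical 3x3 "image classifier": a fixed template pushed through a sigmoid.
TEMPLATE = [
    [0.0, 1.0, 0.0],
    [1.0, 2.0, 1.0],
    [0.0, 1.0, 0.0],
]


def class_score(image):
    z = sum(TEMPLATE[r][c] * image[r][c] for r in range(3) for c in range(3)) - 2.0
    return 1.0 / (1.0 + math.exp(-z))


def saliency_map(score_fn, image, eps=1e-4):
    # Vanilla-gradient saliency: |d score / d pixel|, estimated per pixel
    # with a central finite difference instead of autodiff.
    rows, cols = len(image), len(image[0])
    sal = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            up = [row[:] for row in image]
            down = [row[:] for row in image]
            up[r][c] += eps
            down[r][c] -= eps
            sal[r][c] = abs(score_fn(up) - score_fn(down)) / (2 * eps)
    return sal


image = [[0.2, 0.8, 0.1], [0.7, 0.9, 0.6], [0.0, 0.5, 0.3]]
for row in saliency_map(class_score, image):
    print(" ".join(f"{v:.3f}" for v in row))
```

The center pixel, which carries the largest template weight, gets the highest saliency, and pixels the score ignores get zero. On a real network, the same per-pixel map is usually rendered as a heatmap over the input image.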

Challenges and Limitations

Despite these advances, XAI still faces significant challenges. One is the complexity of the models themselves: many explanation methods do not reveal a model's full reasoning but approximate it, for example only in the neighborhood of a single input, so an explanation may not be fully faithful to what the model actually computes.
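One common response to this complexity is to explain a model locally rather than globally. The sketch below follows the idea behind LIME: sample perturbed inputs around one point, weight each sample by its proximity, and fit a weighted linear model whose slopes serve as the local explanation. It is a simplified, hypothetical illustration and not the LIME library's API; `black_box`, the Gaussian perturbation scale, and the kernel width are assumptions, and the surrogate is only meaningful near the chosen point.

```python
import math
import random


def black_box(x):
    # Hypothetical nonlinear model standing in for an opaque network.
    return math.sin(x[0]) + x[0] * x[1]


def solve(A, b):
    # Gaussian elimination with partial pivoting for a small dense system.
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def local_surrogate(f, x, n_samples=500, scale=0.1, kernel_width=0.2, seed=0):
    # Weighted least squares on [1, offsets] via the normal equations.
    rng = random.Random(seed)
    dim = len(x) + 1  # intercept + one slope per feature
    A = [[0.0] * dim for _ in range(dim)]
    b = [0.0] * dim
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in x]
        z = [xi + d for xi, d in zip(x, offsets)]
        # Proximity kernel: samples closer to x count more.
        weight = math.exp(-sum(d * d for d in offsets) / kernel_width ** 2)
        phi = [1.0] + offsets
        target = f(z)
        for i in range(dim):
            b[i] += weight * phi[i] * target
            for j in range(dim):
                A[i][j] += weight * phi[i] * phi[j]
    coef = solve(A, b)
    return coef[0], coef[1:]


point = [0.5, 1.0]
intercept, slopes = local_surrogate(black_box, point)
print(f"intercept={intercept:.3f}, slopes={[round(s, 3) for s in slopes]}")
```

For this toy function the true local slopes are known analytically (cos(0.5) + 1.0, roughly 1.878, and 0.5), so the fitted slopes can be checked against them. For a real black box no such ground truth exists, which is part of what makes judging the faithfulness of explanations hard.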

Another challenge is data. Modern AI models are trained on large datasets, and obtaining the data needed to generate and validate explanations can be difficult. In addition, AI models may contain biases and errors, which makes their decisions harder to trust.

Conclusion/Key Takeaways

Explainable AI aims to make AI models more transparent and interpretable. Progress in XAI matters for how AI systems are built, because explanations help make those systems more trustworthy and accountable.

However, significant challenges remain, including the complexity of modern models and their dependence on large amounts of data. Even so, the potential benefits make XAI a rapidly evolving field that is likely to shape how AI systems are developed in the future.

The evolution of explainable AI is a critical step toward making AI more trustworthy and accountable, and continued progress in the field should make AI systems easier to understand, audit, and improve.